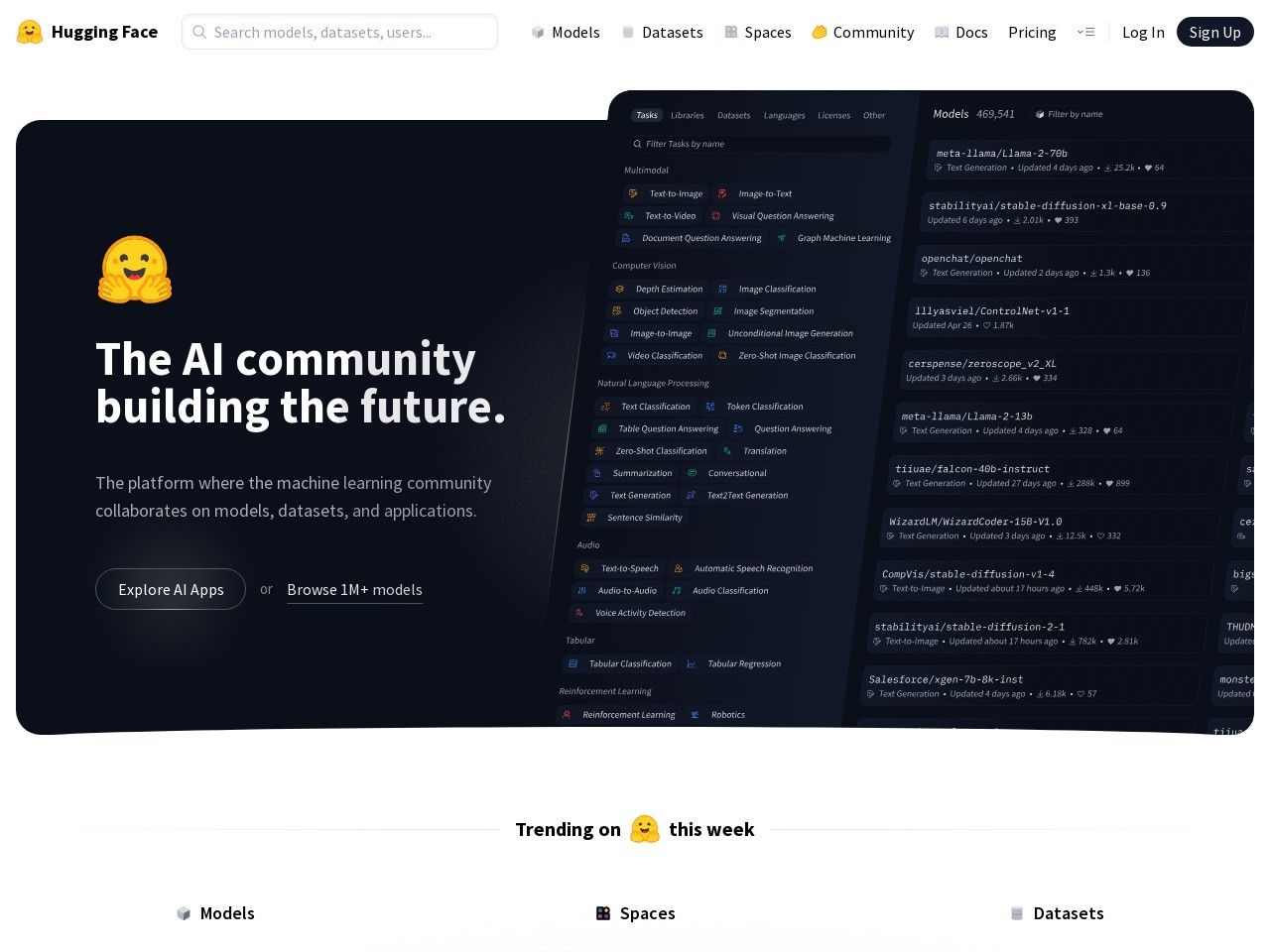

In today’s AI landscape, open-source large language models (LLMs) are advancing rapidly. Hugging Face’s Open LLM Leaderboard has become a widely used platform for tracking and evaluating the performance of these models.

Website Introduction

Open LLM Leaderboard aims to provide researchers, developers, and AI enthusiasts with a transparent and comparable model evaluation platform, helping users understand the performance of different open-source LLMs.

Key Features

- Model Ranking and Evaluation: Conducts standardized benchmark tests to rank models, ensuring fair comparisons under identical conditions.

- Reproducibility Support: Offers detailed evaluation data and methods, allowing users to reproduce results using provided code and tools.

- Model Details: Displays input-output details, parameter sizes, and other information for each model, facilitating in-depth understanding.

- Community Collaboration and Submission: Users can submit their models for evaluation and engage in community discussions, report issues, or share insights.

- Data Access: Evaluation results are published as datasets on the Hugging Face Hub, where users can download and analyze them.
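
Once results have been downloaded, the ranking idea described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the leaderboard's actual scoring code: the function name `rank_models`, the model names, the benchmark names, and the scores are all hypothetical placeholders. It assumes each model's results are available as a mapping from benchmark name to score, and it averages only over benchmarks that every model was evaluated on, so that models are compared under identical conditions.

```python
from statistics import mean


def rank_models(scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank models by their mean score over a shared set of benchmarks.

    `scores` maps a model name to a {benchmark name: score} mapping.
    Returns (model name, average) pairs, best model first.
    """
    if not scores:
        return []
    # Compare only on benchmarks that every model was evaluated on.
    shared = set.intersection(*(set(s) for s in scores.values()))
    if not shared:
        raise ValueError("models share no common benchmark")
    averages = {name: mean(s[b] for b in shared) for name, s in scores.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical models and placeholder scores, NOT real leaderboard results.
example = {
    "org/model-a": {"bench_1": 60.0, "bench_2": 40.0},
    "org/model-b": {"bench_1": 70.0, "bench_2": 50.0, "bench_3": 90.0},
}
ranking = rank_models(example)
for name, avg in ranking:
    print(f"{name}: {avg:.1f}")
```

In this sketch `bench_3` is ignored because only one model reports it. Restricting the average to shared benchmarks is one simple way to keep the comparison fair; the leaderboard itself may aggregate scores differently.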

Related Projects

Open LLM Leaderboard is built on EleutherAI’s LM Evaluation Harness, an open-source framework for evaluating language models, which helps keep evaluations rigorous and consistent across models.

Advantages

Since its launch, Open LLM Leaderboard has attracted over 2 million unique visitors, and approximately 300,000 community members take part each month.

Pricing

The platform is entirely free: users can access all of its features at no cost.

Summary

Founded in 2016 and headquartered in the United States, Hugging Face is dedicated to providing open-source AI tools and platforms. Through Open LLM Leaderboard, users can access the latest model evaluation data, advancing AI research and applications.
