Training large language models such as those behind ChatGPT typically demands massive computational resources and comes at a high cost. Microsoft’s open-source library DeepSpeed offers developers a cheaper and more efficient way to do it.

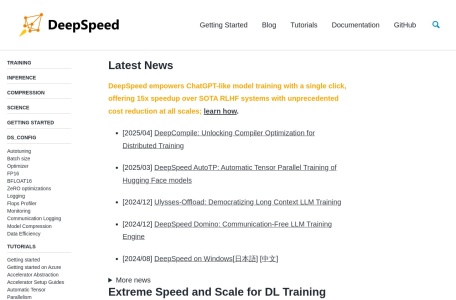

Website Introduction

DeepSpeed is an open-source deep learning optimization library from Microsoft. It reduces the compute and memory needed for training and provides efficient distributed training, which makes it especially well suited to large language models.

Key Features

- Supports training and inference of dense or sparse models with billions or trillions of parameters.

- Achieves excellent system throughput and efficiently scales to thousands of GPUs.

- Enables training and inference on resource-constrained GPU systems.

- Achieves unprecedentedly low latency and high throughput for inference.

- Applies extreme compression to cut inference latency and model size at low cost.

Related Projects

DeepSpeed has powered the training of some of the world’s largest language models, such as Megatron-Turing NLG 530B (MT-530B) and BLOOM. Its innovations include ZeRO, 3D-Parallelism, DeepSpeed-MoE, and ZeRO-Infinity, which greatly improve the efficiency and ease of use of large-scale deep learning training.
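To make the memory savings behind ZeRO more concrete, here is a rough back-of-the-envelope sketch. It assumes mixed-precision training with Adam, where each parameter costs about 2 bytes for fp16 weights, 2 bytes for fp16 gradients, and 12 bytes of fp32 optimizer state, and it ignores activations and temporary buffers. The function name `zero_model_state_gb` is made up for illustration and is not part of DeepSpeed.

```python
def zero_model_state_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Approximate per-GPU memory (in GB, 1e9 bytes) for model states only.

    Assumes mixed-precision Adam: 2 + 2 + 12 bytes per parameter.
    stage 0 = no partitioning, 1 = optimizer states partitioned,
    2 = + gradients partitioned, 3 = + parameters partitioned.
    """
    if stage not in (0, 1, 2, 3):
        raise ValueError("stage must be 0, 1, 2, or 3")
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")

    params = 2.0 * num_params   # fp16 weights
    grads = 2.0 * num_params    # fp16 gradients
    optim = 12.0 * num_params   # fp32 weights, momentum, variance

    # Each ZeRO stage spreads one more kind of state across the GPUs.
    if stage >= 1:
        optim /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        params /= num_gpus

    return (params + grads + optim) / 1e9


if __name__ == "__main__":
    # Example: a 7.5-billion-parameter model on 64 GPUs.
    for stage in range(4):
        gb = zero_model_state_gb(7.5e9, 64, stage)
        print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

Under these assumptions, the per-GPU footprint for model states drops from roughly 120 GB without ZeRO to about 1.9 GB with stage 3, which is why models of this size become trainable on ordinary GPUs.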

Advantages

DeepSpeed provides an easy-to-use deep learning optimization software suite, making training and inference of large-scale models more efficient and economical. Its innovative parallelism technology and high-performance kernel optimizations enable developers to achieve excellent model performance with limited resources.
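In practice, most of these optimizations are switched on through a configuration dictionary or JSON file. The following is a minimal sketch of such a configuration, not a recommended setup: the batch sizes, learning rate, and GPU count are placeholder values, and `build_ds_config` is a hypothetical helper written for this example.

```python
import json


def build_ds_config(micro_batch_per_gpu: int = 4,
                    grad_accum_steps: int = 8,
                    num_gpus: int = 8,
                    zero_stage: int = 2,
                    offload_optimizer_to_cpu: bool = True) -> dict:
    """Build a small DeepSpeed-style config dict (illustrative values only)."""
    config = {
        # DeepSpeed expects: global batch = micro batch * accumulation steps * GPUs.
        "train_batch_size": micro_batch_per_gpu * grad_accum_steps * num_gpus,
        "train_micro_batch_size_per_gpu": micro_batch_per_gpu,
        "gradient_accumulation_steps": grad_accum_steps,
        "optimizer": {"type": "AdamW", "params": {"lr": 1e-4}},
        "fp16": {"enabled": True},
        "zero_optimization": {"stage": zero_stage},
    }
    if offload_optimizer_to_cpu:
        # Keep optimizer states in CPU memory to free up GPU memory.
        config["zero_optimization"]["offload_optimizer"] = {"device": "cpu"}
    return config


ds_config = build_ds_config()
print(json.dumps(ds_config, indent=2))

# With DeepSpeed and PyTorch installed, and `model` being your own
# torch.nn.Module (an assumption, not defined here), training setup
# would look roughly like this:
#
#   import deepspeed
#   engine, optimizer, _, _ = deepspeed.initialize(
#       model=model,
#       model_parameters=model.parameters(),
#       config=ds_config,
#   )
```

The training loop then calls `engine.backward(loss)` and `engine.step()` in place of the usual PyTorch calls, and the script is typically started with the `deepspeed` launcher so that one process runs per GPU.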

Pricing

DeepSpeed is an open-source project released under the Apache 2.0 license (early releases used the MIT license), so it is free to use, including for commercial purposes.

Summary

Microsoft first released DeepSpeed in February 2020 as a set of efficient deep learning optimization tools. With the features described above, users can train and deploy large language models at lower cost, meeting the needs of modern AI applications.
